Optimized Time Zone Location Lookup in PostgreSQL

Background

I have a dataset where users are allowed to add “hours of operation”, but for some reason, they do not provide time zone information. This is great if the client viewing the point is local and understands the time zone, but this is a national project.

Data Sources

OpenStreetMap

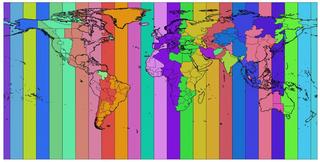

OpenStreetMap seems to maintain the best time zones that are publicly available with the timezone key. OpenStreetMap documentation suggests editors to:

Use timezone=* to specify which IANA time zone database time zone applies.

A developer, Evan Siroky, is apparently a big fan of mapping time zone boundaries. Evan created a node.js tool to extract the time zones directly out of OpenStreetMap. There are even pre-made time zone files in GeoJSON and Shapefile formats for those looking to just get the data quickly.

PostgreSQL

PostgreSQL has the IATA (Olson) Time Zone database built in (as described here). This is the same time zone list that is suggested by OpenStreetMap, so things should line up (spoiler: they don’t always).

Process

Clone and run boundary builder

Normally, I would just use the GeoJSON file, but since I’m documenting this, I might as well run the full process.

Install Node.js / git / gdal / zip

Also, since I’m documenting it, I’m assuming the use of a fresh Ubuntu machine (or image) that doesn’t have anything installed yet. (I borrowed the nodesource script documentation from DigitalOcean)

curl -sL https://deb.nodesource.com/setup_16.x -o nodesource_setup.sh

nano nodesource_setup.sh

At this point, it’s always important to look around in the nodesource file to make sure it’s what you want to do. Once you do that, you can continue.

sudo bash ./nodesource_setup.sh

sudo apt-get update && sudo apt-get install -y \

nodejs \

git \

gdal-bin \

zip

mkdir ~/projects

cd ~/projects

git clone https://github.com/evansiroky/timezone-boundary-builder

cd ~/projects/timezone-boundary-builder

npm install

Run the time zone boundary builder

The README for the library is pretty great, so you can look there for all the details. This is the command I used to get a GeoJSON file that I will import into PostgreSQL.

node \

--max-old-space-size=8192 \

index.js \

--skip_analyze_diffs \

--skip_shapefile \

--skip_zip \

--downloads_dir ./downloads \

--dist_dir ./dist

This loops through every time zone in the osmBoundarySources.json file, downloads that time zone from the Overpass API, and waits 4 seconds between each time zone. There are 470 time zones in that file, so that means this process will take at least 4 * 470 = 1800 seconds (or 30 minutes) to complete. There is a status indicator, and it says the process will take about 2 hours, which also includes the time to download the data from Overpass. I get a lot of 429: Too Many Requests issues so it’s taking some time.

Wait 🕑

I took this time to write up my process so far in Markdown, refill my tea, and work on other projects.

Load into PostgreSQL

The final GeoJSON file will be called ./dist/combined.json

Gdal was already installed with the apt-get command above, so ogr2ogr should be installed installed.

You can read all about the switches I used on the GDAL PostgreSQL Driver documentation.

Make sure to change the host, user, dbname, and password fields in the PG line, or use environment variables.

ogr2ogr \

-f "PostgreSQL" \

PG:"host=myhost user=myloginname dbname=mydbname password=mypassword" \

./dist/combined.json \

-lco OVERWRITE=yes \

-lco DIM=2 \

-lco SPATIAL_INDEX=GIST \

-lco GEOMETRY_NAME=the_geom \

-lco FID=id \

-nlt PROMOTE_TO_MULTI \

-nln osm_timezones

Make sure the new Data matches PostgreSQL timezones table

PostgreSQL contains a table called pg_timezone_names that contains the current offset for every time zone. These should match up, but since we don’t always keep out PostgreSQL databases or OSM extracts current, there may be gaps. I try to avoid these situations, and you can test it with a query like this:

SELECT tzid, now() at time zone "tzid" from osm_timezones;

If you get an error, the easiest way to prevent issues is to add a column that lets you filter out the time zones that don’t match. You really shouldn’t have to do this as long as everything is up-to-date, but it never hurts to check.

ALTER TABLE osm_timezones ADD COLUMN pg_timezone BOOLEAN;

WITH matched_timezones AS (

SELECT

pg_timezone_names.name IS NOT NULL AS matched,

osm_timezones.tzid

FROM osm_timezones

LEFT JOIN pg_timezone_names ON pg_timezone_names.name = osm_timezones.tzid

)

UPDATE osm_timezones

SET pg_timezone = matched_timezones.matched

FROM matched_timezones

WHERE matched_timezones.tzid = osm_timezones.tzid;

Find a point in a Polygon

Test out the code to make sure it works. I like running across the 40th parallel, at 15° at a time, since it cuts through some populated areas.

- -75° is New Jersey (Cinnaminson / Philly Suburbs)

- -90° is Illinois (Roughly Springfield)

- -105° is Colorado (North of Denver at ‘Baseline’ road, which follows the 40th parallel)

- -120° is California (at the NV border)

SELECT tzid

FROM osm_timezones

WHERE ST_Contains(the_geom, ST_SetSRID(ST_MakePoint(-75, 40), 4326))

These queries are running pretty quickly for me (around 2.0 ms) but there are things we can do to speed it up.

Optimizing the Queries

The ST_Contains function runs a point in polygon function on all the polygons that may contain the single point. These time zone polygons can be quite large, for example, the America/New_York time zone contains 32244 vertices.

Somewhere deep in the PostgreSQL code, it is looping through each of those 32,244 points to make sure that your point is contained within that polygon. PostgreSQL is smart and can optimize this a little internally, and the gist spatial index we added with ogr2ogr command helps a lot too.

We can help PostgreSQL out a little by splitting up our polygons into ones with less points in them, basically breaking up something like the New York time zone into a bunch of smaller polygons that each contain less points. This is where the ST_Subdivide function comes in.

In order to do this, I am going to split up the time zones into polygons with no more than 255 points each, by running this query:

CREATE TABLE osm_timezones_split AS

SELECT id, tzid, ST_Subdivide(the_geom, 255) AS the_geom

FROM osm_timezones WHERE pg_timezone = true;

It will take a while, so you can go work on something else while it processes. It took me 2 minutes 11 seconds to run. I chose 255 because it’s the default, I’m sure there’s a good balance of vertices in a polygon to amount of polygons.

After than finishes, make sure to add a spatial index to the new table:

CREATE INDEX

osm_timezones_split_the_geom_idx ON

osm_timezones_split USING GIST (the_geom);

Run this query on the new table, and you should see an improvement:

SELECT tzid

FROM osm_timezones_split

WHERE ST_Contains(the_geom, ST_SetSRID(ST_MakePoint(-75, 40), 4326))

I am getting around 0.8 ms per query now!

Testing on a larger dataset

For a larger test, I am going to join all the time zones for the USGS Structures dataset, with 445,921 records.

SELECT

usgs_struct.name,

tzid

FROM usgs_struct LEFT JOIN osm_timezones_split ON

ST_Contains(osm_timezones_split.the_geom, usgs_struct.the_geom);

Without subdividing the dataset, I was able to join the two datasets in 1090377.223 ms (18 minutes). With the subdivided database, it took 5038.160 ms to join the dataset.

Oddities

I found that if I don’t do the LEFT JOIN (just use a JOIN instead), that it runs much quicker, in fact the osm_timezones JOIN runs in about 3 seconds, which is 2 seconds faster than the left join with the osm_timezones_split table. In theory, each point should only fall into one time zone, so there is really no need for a nested loop left join. I’ll have to look into this further.